Mastering Data Vault 2.0 Webinar Recap

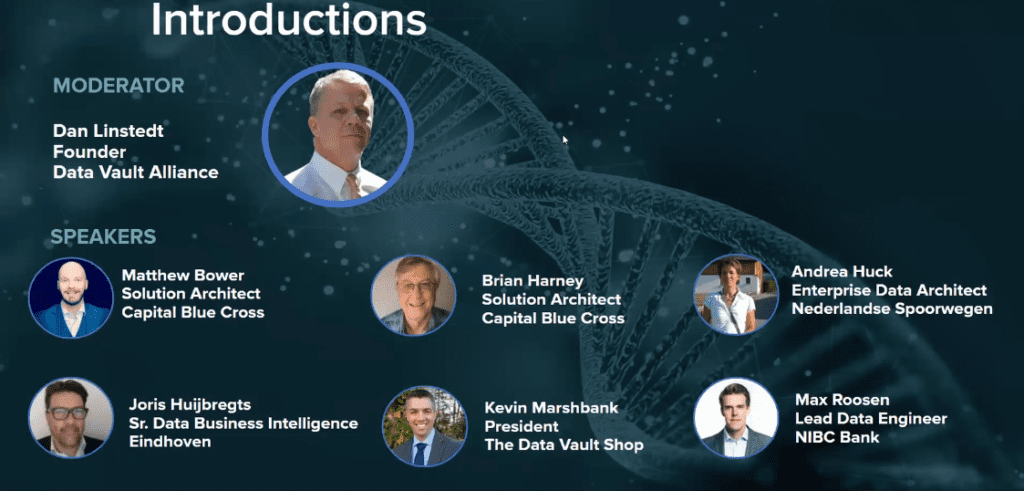

The “Mastering Data Vault 2.0: Insights from Pioneers and Practitioners” webinar, moderated by Dan Linstedt, Founder of Data Vault Alliance, brought together an esteemed panel of experts.

The session included Matthew Bower and Brian Harney, Solution Architects at Capital Blue Cross; Andrea Huck, Enterprise Data Architect at Nederlandse Spoorwegen; Joris Huijbregts, a Senior Data Business Intelligence professional from Eindhoven; Kevin Marshbank, President of The Data Vault Shop; and Max Roosen, Lead Data Engineer at NIBC Bank.

Each speaker shared their unique experiences and insights into the Data Vault 2.0 methodology. This blog recaps the key discussions, insights, Q&A sessions, and expert opinions presented during the webinar.

Implementing Data Vault 2.0

Patrick O’Halloran kicked off the webinar with an introduction of the distinguished panelists, setting the stage for an enlightening discussion on the evolution and benefits of Data Vault 2.0. The speakers shared their insights and experiences, illustrating the clear contrast between traditional data warehousing methods and Data Vault 2.0. Throughout the discussions, a recurring theme was the remarkable scalability offered by the Data Vault 2.0 methodology.

Matthew Bower from Capital Blue Cross vividly described this benefit: “Our engineers are in there expanding the vault with our corporate data sources… we could keep everything virtualized on top of the vault, blow it up, no worries, and do it again.” The quote highlights two things: the vault can be expanded source by source, and the layers virtualized on top of it can be torn down and rebuilt without risk to the underlying data, a flexibility that more rigid traditional systems struggle to match.

Echoing this sentiment, Andrea Huck from Nederlandse Spoorwegen further emphasized the transformative nature of Data Vault 2.0, stating, “We chose Data Vault for its scalability and ability to adapt quickly to new data sources, which is crucial in our rapidly evolving data landscape.” Her statement reinforced the methodology’s exceptional adaptability, a critical advantage in today’s dynamic data management environment.

Adaptability and Auditability of Data Vault 2.0

Adaptability itself was a major point of discussion. Speakers noted that the methodology allows a more agile response to changing business needs and data structures, which is especially important in today’s fast-paced business environment. They also emphasized the improved auditability of Data Vault 2.0: its structure records changes as they arrive instead of overwriting earlier values, which supports change data capture. This is particularly advantageous for businesses that need to track and understand how their data has changed over time, helping to ensure compliance and data integrity.
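The auditability point can be made concrete with a minimal sketch of an insert-only satellite. It assumes a common Data Vault 2.0 convention: a "hash diff" computed over a record's descriptive attributes is compared with the latest stored version, and a new row is appended only when something changed, so earlier versions are never overwritten. The `hash_diff` and `load_satellite` functions, and the in-memory list standing in for a satellite table, are hypothetical and for illustration only. MD5 is used because it is a common choice for Data Vault hashing, not because the speakers specified it.

```python
import hashlib
from datetime import datetime, timezone

# Hypothetical helpers for illustration; not a WhereScape or Data Vault Alliance API.

def hash_diff(attributes: dict) -> str:
    """Hash the descriptive attributes in a stable (sorted-key) order."""
    payload = "||".join(f"{key}={attributes[key]}" for key in sorted(attributes))
    return hashlib.md5(payload.encode("utf-8")).hexdigest()

def load_satellite(satellite: list, hub_key: str, attributes: dict, load_ts=None) -> bool:
    """Append a new satellite row only if the attributes changed.

    Rows are never updated or deleted, so the full history stays auditable.
    Returns True if a row was inserted.
    """
    new_diff = hash_diff(attributes)
    history = [row for row in satellite if row["hub_key"] == hub_key]
    if history and history[-1]["hash_diff"] == new_diff:
        return False  # unchanged since the latest stored version
    satellite.append({
        "hub_key": hub_key,
        "load_ts": load_ts or datetime.now(timezone.utc),
        "hash_diff": new_diff,
        **attributes,
    })
    return True

# Usage: only the first version and the actual change are stored.
sat = []
load_satellite(sat, "cust-1", {"name": "Ada", "city": "Utrecht"})    # True: first version
load_satellite(sat, "cust-1", {"name": "Ada", "city": "Utrecht"})    # False: unchanged
load_satellite(sat, "cust-1", {"name": "Ada", "city": "Eindhoven"})  # True: change captured
print(len(sat))  # 2
```

Because the latest stored hash diff is the only thing compared, re-delivering an unchanged record is harmless, while every real change leaves a new, timestamped row behind.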

Data Vault Automation

A significant emphasis was placed on the role of automation in implementing Data Vault 2.0 successfully. The speakers highlighted tools like WhereScape, which automate many of the repetitive tasks in a Data Vault implementation, speeding up development while keeping the output accurate and standardized. Brian Harney from Capital Blue Cross explained, “It’s made it a lot easier for that whole process… you recompile it and it’s ready to go.” His remark captures the efficiency that automation brings to Data Vault 2.0 work and the improvement it represents over manual methods.
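Much of that efficiency comes from generating repetitive load code from metadata and one shared template instead of hand-writing it for every table. The sketch below illustrates only that general idea; it is not how WhereScape works internally. The `HubSpec` metadata record, the naming conventions (`hub_<name>`, `<name>_hk`), and the staging table names are all hypothetical, and the output is generic SQL for an insert-only hub load.

```python
from dataclasses import dataclass
from string import Template

# One shared template: every hub load follows the same insert-only pattern.
# Assumes each staging batch carries a single load_ts and record_source.
HUB_LOAD_TEMPLATE = Template(
    "INSERT INTO $hub_table ($hash_key, $business_key, load_ts, record_source)\n"
    "SELECT DISTINCT s.$hash_key, s.$business_key, s.load_ts, s.record_source\n"
    "FROM $staging_table s\n"
    "WHERE NOT EXISTS (\n"
    "    SELECT 1 FROM $hub_table h WHERE h.$hash_key = s.$hash_key\n"
    ");"
)

@dataclass
class HubSpec:
    """Hypothetical metadata describing one hub."""
    name: str
    business_key: str
    staging_table: str

    @property
    def hub_table(self) -> str:
        return f"hub_{self.name}"

    @property
    def hash_key(self) -> str:
        return f"{self.name}_hk"

def generate_hub_load(spec: HubSpec) -> str:
    """Fill the shared template from metadata; no hand-written SQL per hub."""
    return HUB_LOAD_TEMPLATE.substitute(
        hub_table=spec.hub_table,
        hash_key=spec.hash_key,
        business_key=spec.business_key,
        staging_table=spec.staging_table,
    )

# Usage: adding a new hub means adding metadata, not writing new load code.
specs = [
    HubSpec("customer", "customer_number", "stg_crm_customer"),
    HubSpec("policy", "policy_id", "stg_policy_admin"),
]
for spec in specs:
    print(generate_hub_load(spec), end="\n\n")
```

Changing the template in one place changes every generated load consistently, which is also why shared, governed templates matter.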

Advanced Topics and Future Directions: The Importance of Version Control and Governance

Dan Linstedt emphasized how critical version control and governance are in the realm of Data Vault. He advocated for dedicated oversight to keep Data Vault templates standardized and applicable globally: “It’s extremely important that everyone globally follows the same production standards and uses the same templates.” In his view, this kind of consistency and quality control is a necessary step toward the broader acceptance and effectiveness of Data Vault practices.

Integrating AI in Data Vault Processes

A significant part of the discussion revolved around integrating AI into Data Vault processes. Using AI, particularly ChatGPT, to generate Python templates and APIs was highlighted as a game-changer for speeding up development. Linstedt summed up AI’s role: “It doesn’t make me smarter; it makes me faster.” The remark captures how AI can make Data Vault work more efficient, delivering solutions more quickly without compromising the rigor and integrity of the process.

Joris Huijbregts from Eindhoven shared his experience of the efficiency these technologies bring: “I agree, and in combination with WhereScape, what we did was ask ChatGPT to write the API code to extract data from a source. So even that part, writing an API to make a specific extract from the source, was sped up tremendously. And after that, you have the data and you can just run it through, which makes onboarding of new data even faster.” His example shows how AI-generated code, such as API extraction routines, combined with automation can significantly speed up data integration in Data Vault 2.0.

The Future Role of AI in Data Vault

The future role of AI in Data Vault was likened to the evolution of Wikipedia. Initially seen as a secondary source, Wikipedia gradually gained credibility to become a primary, reliable resource. This analogy suggests the growing significance and dependability of AI in Data Vault, envisioning a future where AI’s contributions are foundational to data management practices.

Data Vault 2.1 and Professional Learning Opportunities

The introduction of Data Vault 2.1 constituted a significant part of the webinar. Linstedt expanded on its scope, which now includes contemporary data management concepts like data lakes, data mesh, and strategies for handling unstructured data. His announcement of upcoming courses and beta classes for Data Vault 2.1 is a call to action for professionals to engage with and learn from these new materials.

Final Thoughts and Call to Action: Looking Ahead to Data Vault 2.1

As the webinar neared its conclusion, there was a collective emphasis on the benefits and successes of Data Vault 2.0, coupled with excitement for the upcoming release of Data Vault 2.1. The speakers uniformly emphasized the robustness of Data Vault 2.0 in managing complex data environments and its readiness to embrace AI and other emerging technologies.

The adaptability of Data Vault 2.0 in various business scenarios was a key takeaway. Matthew Bower from Capital Blue Cross highlighted this aspect, saying, “Data Vault allows us to touch every single data domain in our business, bringing in a level of flexibility we didn’t have before.” This statement underscored the methodology’s capability to handle diverse and complex data structures.

Embracing AI and Future Technologies in Data Vault 2.0

The webinar delved into the significant role of AI and automation in enhancing Data Vault 2.0. Discussions centered on how these advanced technologies are being integrated into the methodology to streamline processes and open up new possibilities for data management.

This integration signifies a leap in the evolution of Data Vault, moving it beyond traditional data handling methods and positioning it at the forefront of technological innovation in data management. The conversations made it clear that AI and automation are not just additional features but essential components that are reshaping the way Data Vault 2.0 operates in today’s fast-paced, data-driven world.

Anticipating Data Vault 2.1: Expanding Scope and Capabilities

Dan Linstedt’s discussion on Data Vault 2.1 stirred significant interest, highlighting its expanded scope to include modern data concepts like data lakes and data mesh. “With Data Vault 2.1, we are not just evolving; we are revolutionizing the way data can be managed and utilized,” he stated, pointing to the potential transformative impact of the upcoming version.

Q&A Session Recap: Detailed Insights from ‘Mastering Data Vault 2.0’ Webinar

During the webinar, attendees posed various questions to the panelists, focusing on specific challenges, implementations, and the future of Data Vault 2.0. Here’s a detailed overview of the Q&A session:

- Building Data Vault from NoSQL Databases

- Question: Can Data Vault be implemented with NoSQL databases?

- Answer: Dan Linstedt explained that feasibility depends on the type of NoSQL database. The physical Data Vault model might need adapting for specific platforms like Cassandra or MongoDB. The key is to modify the physical model (e.g., by flattening Data Vault objects) for performance without compromising Data Vault standards.

- Integration of AI in Data Vault Processes

- Question: How can AI, specifically ChatGPT, be integrated into Data Vault methodologies?

- Answer: The panel discussed using AI tools like ChatGPT to generate Python templates and API code. While AI can significantly speed up development, its output needs to be carefully vetted. Integrating an AI interface into tools like WhereScape RED was also suggested as a way to further improve agility and efficiency.

- Implementing SDMX in Data Vault

- Question: Is it possible to implement Statistical Data and Metadata Exchange (SDMX) with Data Vault?

- Answer: It was suggested that specific templates might be necessary for exporting data in SDMX format. The integration would likely require custom solutions tailored to the unique requirements of SDMX.

- Data Vault 2.1 Course and Certification

- Question: What are the details regarding the Data Vault 2.1 certification and courses?

- Answer: Dan Linstedt announced upcoming courses for Data Vault 2.1, including beta classes offering an interactive experience with new material. He mentioned that participants in these beta classes would be eligible for free enrollment in the full course and the 2.1 certification. The course would cover advanced topics like data lakes, data mesh, and unstructured data processing.

- Data Vault Model Scalability

- Question: How scalable is the Data Vault model, especially in terms of loading hubs, links, and satellites?

- Answer: The Data Vault model supports parallel processing: hubs, links, and satellites can be loaded independently of one another. Its design also means that a change in one part does not require a complete overhaul, which provides both flexibility and scalability.

- Data Vault Implementation with Virtual Sources

- Question: How should virtual layers, such as views, be managed in Data Vault?

- Answer: The panel explained that while the raw vault remains unchanged, views and other virtual layers built on top of it can be modified as needed. This allows adjustments in response to evolving business requirements without affecting the underlying raw data structure.

- Future Directions for Data Vault

- Question: What are the future directions and advancements in Data Vault methodologies?

- Answer: The future of Data Vault includes more integration with AI and machine learning, as well as advancements in handling complex data types and structures, as evidenced by the upcoming Data Vault 2.1.
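Two of the answers above, independent loading of hubs, links, and satellites, and freely rebuildable virtual layers, can be illustrated together. The following is a minimal sketch using Python's built-in `sqlite3` module. It assumes a common Data Vault 2.0 convention: hash keys are computed from business keys, so a link or satellite load can derive its keys directly instead of looking them up in a hub first. The tables, columns, and the `hk` helper are hypothetical. A real platform would run the loads in parallel; this single-connection sketch only demonstrates that their order does not matter.

```python
import hashlib
import sqlite3

def hk(*business_keys: str) -> str:
    """Hash key derived only from normalized business keys, so any loader can compute it."""
    normalized = "||".join(key.strip().upper() for key in business_keys)
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE hub_customer (customer_hk TEXT PRIMARY KEY, customer_no TEXT);
    CREATE TABLE hub_policy   (policy_hk   TEXT PRIMARY KEY, policy_no   TEXT);
    CREATE TABLE link_customer_policy (
        link_hk TEXT PRIMARY KEY, customer_hk TEXT, policy_hk TEXT);
    CREATE TABLE sat_customer (
        customer_hk TEXT, load_ts TEXT, city TEXT,
        PRIMARY KEY (customer_hk, load_ts));
""")

# Hypothetical source rows: (customer_no, policy_no, city, load_ts)
source = [("C1", "P9", "Utrecht", "2024-01-01"), ("C1", "P9", "Eindhoven", "2024-02-01")]

# Each loader reads only the source and depends on no other vault table,
# so the order below is arbitrary (link and satellite load before the hubs).
def load_link():
    con.executemany("INSERT OR IGNORE INTO link_customer_policy VALUES (?, ?, ?)",
                    [(hk(c, p), hk(c), hk(p)) for c, p, _, _ in source])

def load_sat():
    con.executemany("INSERT OR IGNORE INTO sat_customer VALUES (?, ?, ?)",
                    [(hk(c), ts, city) for c, _, city, ts in source])

def load_hubs():
    con.executemany("INSERT OR IGNORE INTO hub_customer VALUES (?, ?)",
                    [(hk(c), c) for c, _, _, _ in source])
    con.executemany("INSERT OR IGNORE INTO hub_policy VALUES (?, ?)",
                    [(hk(p), p) for _, p, _, _ in source])

for loader in (load_link, load_sat, load_hubs):
    loader()

# Virtual layer: a view over the raw vault. It can be dropped and redefined
# as requirements change without touching the raw tables underneath.
def build_current_customer_view():
    con.executescript("""
        DROP VIEW IF EXISTS v_customer_current;
        CREATE VIEW v_customer_current AS
        SELECT h.customer_no, s.city
        FROM hub_customer h
        JOIN sat_customer s ON s.customer_hk = h.customer_hk
        WHERE s.load_ts = (SELECT MAX(load_ts) FROM sat_customer
                           WHERE customer_hk = h.customer_hk);
    """)

build_current_customer_view()
print(con.execute("SELECT * FROM v_customer_current").fetchall())
# [('C1', 'Eindhoven')]
```

The raw satellite still holds both versions of the customer's city; only the view decides to show the latest one, so redefining that rule later means rebuilding a view rather than reloading data.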

The Q&A session provided valuable insights into practical considerations, current challenges, and future advancements in Data Vault methodologies.

Harness Data Vault 2.0’s Potential with WhereScape

The “Mastering Data Vault 2.0” webinar provided a deep dive into the evolution, benefits, and future of Data Vault 2.0. Key highlights included its scalability, adaptability, enhanced auditability, and the significant role of automation. Insights from diverse experts underscored Data Vault 2.0’s effectiveness in complex data environments and its readiness for emerging technologies like AI.

The upcoming Data Vault 2.1, with its focus on modern data concepts, signals an exciting future for data management. For those keen to explore these insights further, watch the full webinar on demand here.

If you’re ready to see WhereScape Data Vault 2.0 in action, schedule a personalized demo here. Embrace the future of data management with Data Vault 2.0 and stay ahead in the rapidly evolving world of data.

